Adaptive Testing vs. Random Testing in Psychotechnical Tests

In this short article, we review and compare adaptive testing and random testing, covering the advantages and disadvantages of each method.

A brief description of the design mechanism.

Adaptive testing

In an adaptive test, the examinee is presented with a sequence of questions, and each next item is selected based on the examinee's answers to the previous ones. For example, if an examinee does well on a sequence of medium-difficulty questions, the next questions will be more difficult; conversely, if the examinee fails the medium questions, the next questions will be easier.

Therefore, an adaptive test's item pool contains more questions than any single examinee will see, and it is impossible to know in advance how many questions a given examinee will receive. The examinee effectively 'jumps' up and down between difficulty levels, and the number of questions he faces varies from examinee to examinee.
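The adaptive logic described above can be illustrated with a simplified up/down staircase. This is a sketch under our own assumptions, not LogiPass's algorithm or that of any operational test: production computerized adaptive tests usually select items using item response theory (for example, by maximum information), and the five-level difficulty scale, the starting level, the stopping rule, and the name `run_staircase` are all hypothetical.

```python
from typing import Callable, List, Tuple

def run_staircase(answer: Callable[[int], bool],
                  start_level: int = 3,
                  min_level: int = 1,
                  max_level: int = 5,
                  max_reversals: int = 4,
                  max_items: int = 30) -> List[Tuple[int, bool]]:
    """Simplified adaptive test using an up/down staircase (hypothetical).

    `answer(level)` returns True if the examinee answers an item of the
    given difficulty level correctly. Returns the (level, correct) history.
    """
    level = start_level
    history: List[Tuple[int, bool]] = []
    reversals = 0
    last_direction = 0  # +1 = last move was up, -1 = down, 0 = no move yet
    # Stop when the level has "settled" (enough reversals) or the item cap
    # is reached, so the test length differs from examinee to examinee.
    while len(history) < max_items and reversals < max_reversals:
        correct = answer(level)
        history.append((level, correct))
        direction = 1 if correct else -1  # harder after success, easier after failure
        if last_direction != 0 and direction != last_direction:
            reversals += 1
        last_direction = direction
        level = min(max_level, max(min_level, level + direction))
    return history

# An examinee who solves items up to level 3 but not above settles quickly.
steady = run_staircase(lambda level: level <= 3)
# An examinee who solves everything keeps going until the item cap.
strong = run_staircase(lambda level: True)
print(len(steady), len(strong))  # 5 30
```

The two usage cases show the property discussed above: the number of items is not fixed in advance but depends on how the examinee answers.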

Random testing

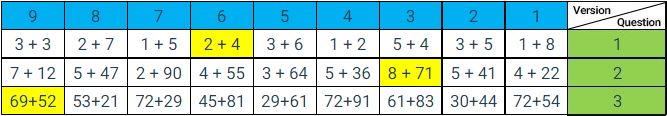

In a random test, every question position has several versions of the same difficulty level, and the system randomly draws one version for each position. For example, one examinee may receive version 6 of the first question, version 3 of the second, and version 9 of the third, while a second examinee receives version 2 of the first question, version 8 of the second, and version 5 of the third, as shown in the table above.

The outcome is that two different examinees receive different questions, but at the same difficulty level. Because every position in the test is always filled by exactly one version, the number of questions in a random test is predetermined and known in advance, and the duration of the test can be estimated. Furthermore, since the versions of each question share the same difficulty level and the system draws them at random for each position, the number of possible combinations is enormous.
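The per-position draw described above can be sketched in a few lines. This is an illustration under stated assumptions, not LogiPass's implementation: the item bank is assumed to be a mapping from question position to a list of equal-difficulty versions, and the names `draw_random_test` and `bank` and the version labels are hypothetical.

```python
import random
from typing import Dict, List, Optional

def draw_random_test(bank: Dict[int, List[str]],
                     rng: Optional[random.Random] = None) -> List[str]:
    """Draw one version at random for each question position.

    `bank` maps a question position (1, 2, 3, ...) to the versions of that
    question, which are assumed to share the same difficulty level.
    """
    if rng is None:
        rng = random.SystemRandom()  # OS entropy, not reproducible by seed
    # Every position is filled exactly once, so the number of questions is
    # fixed; only the drawn versions differ between examinees.
    return [rng.choice(bank[position]) for position in sorted(bank)]

# Hypothetical bank: 3 question positions, 9 versions each.
bank = {pos: [f"Q{pos}-v{v}" for v in range(1, 10)] for pos in (1, 2, 3)}
print(draw_random_test(bank))  # a list such as ['Q1-v6', 'Q2-v3', 'Q3-v9']
```

Passing a seeded `random.Random` makes a draw reproducible for testing, while the default `SystemRandom` avoids a predictable seed in live use.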

While developing the LogiPass test system, when we weighed all the considerations, advantages, and disadvantages of developing online tests, we realized that the main weak point was the exposure of the questions to examinees.

The complexity of a multi-version system and a random question draw.

The effectiveness of every solution we came up with, including adaptive testing, depended on how sophisticated the examinees were and on their ability to share the questions.

Nothing known in the world of testing in the Internet age, especially where a test is used repeatedly, protects the system against copying or against the risk that test takers will outsmart us. Since developing a psychotechnical test system involves significant effort and financial investment, this issue troubled us greatly.

After much consideration, we concluded that there is a way, but it is not easy to develop, operate, and maintain.

It requires more than just a multi-version system: the questions must be drawn from the different versions entirely at random, so that each test is unique, with a completely different set of questions. In other words, a random test.

In our opinion, this is the only way to deal with the sophistication of examinees and the ease with which questions can be copied and studied in a test delivered via the Internet, all the more so when the test can be taken at home and without supervision. In this way, no matter what the examinee does, however sophisticated he is, whether he copies or studies the questions, however much help he receives, and however much effort he invests in 'spying' on and learning the system (including taking screenshots), he cannot know which questions he will be given.

In addition, when we compared developing a random system with an adaptive one, we identified another significant advantage of random testing: the possibility of obtaining information about the examinee's work style.

Since an adaptive system adapts to the examinee, both in the difficulty level of the questions and in the length of the entire test, it is impossible to get an impression of many aspects of his work style and of attributes essential to his functioning at work. For example: how does the examinee react to a challenge (does he persist or give up)? How does he respond to boredom (does he persist or get distracted)? How does he react to failure? How does he perform under pressure when time is running out? In random testing, this information can be collected, saved, and analyzed automatically while the examinee's skills in the specific test area are being tested. We realized that this information about the examinee is worth its weight in gold.
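As a rough illustration of how work-style information could be derived automatically from a random test, the sketch below summarizes a log of answer events into a few simple indicators. The event format (`AnswerEvent`), the function `work_style_summary`, and the three indicators are hypothetical choices of ours; the text does not specify which measures LogiPass computes.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional

@dataclass
class AnswerEvent:
    item_id: str
    t: float               # seconds since the test started
    choice: Optional[int]  # selected option; None if the answer was cleared

def work_style_summary(events: List[AnswerEvent], n_items: int) -> Dict[str, float]:
    """Summarize a hypothetical answer log into simple work-style indicators."""
    current: Dict[str, Optional[int]] = {}
    first_answer_time: Dict[str, float] = {}
    changes = 0
    for e in sorted(events, key=lambda ev: ev.t):
        # Going back to an item and choosing something different counts as a change.
        if e.item_id in current and current[e.item_id] != e.choice:
            changes += 1
        current[e.item_id] = e.choice
        first_answer_time.setdefault(e.item_id, e.t)
    times = sorted(first_answer_time.values())
    # Time spent before first answering each new item.
    gaps = [b - a for a, b in zip([0.0] + times, times)]
    answered = sum(1 for c in current.values() if c is not None)
    return {
        "answered_ratio": answered / n_items,
        "answer_changes": float(changes),
        "mean_seconds_per_new_item": mean(gaps) if gaps else 0.0,
    }

log = [AnswerEvent("q1", 10.0, 2), AnswerEvent("q2", 25.0, 1),
       AnswerEvent("q1", 40.0, 3), AnswerEvent("q3", 50.0, 0)]
print(work_style_summary(log, n_items=4))
# 3 of 4 items answered, 1 answer change, about 16.7 s per new item
```

Because every examinee of a random test sees the same number of items at the same difficulty levels, indicators like these can be compared across examinees, which is the point made in the paragraph above.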

The question was how. How do we develop a deep, extensive, and correctly normed system?

We realized that to achieve this, we would have to test a vast number of examinees: not 100, nor 1,000, but many thousands for each test and each version.

Moreover, even after the tests go live, we will have to keep maintaining an attractive pilot system in which volunteers take the tests willingly and for free, whether to practice, improve, or reduce their anxiety before a test, so that we can replace items whenever we want and ensure that our system cannot be outsmarted.
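The need for many thousands of examinees per version can be motivated with a short sketch. One standard way to check that the versions of a question are equally difficult is to compare their classical difficulty index (the proportion of examinees who answer correctly), and the precision of that estimate depends on sample size. The function names, the 0/1 response format, and the 0.05 tolerance below are illustrative assumptions, not LogiPass's norming procedure.

```python
import math
from statistics import median
from typing import Dict, List

def proportion_correct(responses: List[int]) -> float:
    """Classical item difficulty (p-value): share of 1s in a 0/1 response list."""
    return sum(responses) / len(responses)

def standard_error(p: float, n: int) -> float:
    """Standard error of a proportion p estimated from n examinees."""
    return math.sqrt(p * (1 - p) / n)

def flag_uneven_versions(responses_by_version: Dict[str, List[int]],
                         tolerance: float = 0.05) -> List[str]:
    """Versions whose p-value differs from the median version by more than `tolerance`."""
    p = {v: proportion_correct(r) for v, r in responses_by_version.items()}
    center = median(p.values())
    return sorted(v for v, pv in p.items() if abs(pv - center) > tolerance)

# Sampling noise alone, for a version answered correctly by half the examinees:
print(round(standard_error(0.5, 100), 4))   # 0.05
print(round(standard_error(0.5, 5000), 4))  # 0.0071
```

With 100 examinees, the estimated difficulty of such a version can drift by roughly ten percentage points either way (at 95% confidence) from sampling noise alone; with 5,000 examinees the standard error falls to about 0.007, small enough to tell a genuinely harder version from chance.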

And that's what we did. We developed a complex random system of questions and answers in which randomness applies to the answers as well. How? By shuffling the position and numbering of the answers, so that even if a particular examinee receives the same question again, the chance that the correct answer appears in the same position as the last time that question was presented to him is at most 25%.
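Answer shuffling and the 25% figure can be sketched as follows, assuming each question has four answer options (the text does not state the count). A uniform random permutation places the correct answer in any given position with probability 1/n, so four options give exactly 1/4 and more options give less, consistent with "at most 25%". The names `shuffle_answers` and `same_position_probability` are hypothetical.

```python
import itertools
import random
from fractions import Fraction
from typing import List, Optional, Tuple

def shuffle_answers(options: List[str], correct_index: int,
                    rng: Optional[random.Random] = None) -> Tuple[List[str], int]:
    """Return the options in a random order and the new index of the correct one."""
    if rng is None:
        rng = random.SystemRandom()
    order = list(range(len(options)))
    rng.shuffle(order)  # uniform random permutation of the option indices
    shuffled = [options[i] for i in order]
    return shuffled, order.index(correct_index)

def same_position_probability(n_options: int, previous_position: int) -> Fraction:
    """Exact chance that a uniform shuffle puts the correct answer back at
    `previous_position`, found by enumerating all permutations.
    Without loss of generality, option 0 is treated as the correct one."""
    perms = list(itertools.permutations(range(n_options)))
    hits = sum(1 for p in perms if p[previous_position] == 0)
    return Fraction(hits, len(perms))

options = ["12", "15", "18", "21"]  # hypothetical item; "18" is correct
shown, correct_at = shuffle_answers(options, correct_index=2)
print(shown[correct_at])                          # 18
print(same_position_probability(4, correct_at))   # 1/4
```

Enumerating permutations is only practical for a handful of options, but it verifies the 1/n result exactly rather than by simulation.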

Together, these two elements (the random draw of questions and the shuffling of answers) leave even the most sophisticated examinee uncertain and reduce his motivation to attempt cheating.

An example of three questions in a random calculus test:

Here we have nine versions (the minimum in most of our tests) and three sample questions of increasing difficulty. The items marked in yellow show one example of a random draw for a 3-question test.

Individually, there is nothing special about the items. The beauty, and also the difficulty, lies in how they are arranged and managed and in the random draw system.

Of course, in such a situation it is not feasible to learn all the items in advance (there are 25 questions, each with nine different versions, and this is only one test out of a set of tests).
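The scale of this item pool can be checked with simple arithmetic. Using only the figures given in the text (25 questions, nine versions each), the sketch below counts the items an examinee would have to memorize and the number of distinct question sets. It ignores answer shuffling and the other tests in the set, both of which would only enlarge these numbers.

```python
from fractions import Fraction

QUESTIONS = 25  # question positions in the example test (from the text)
VERSIONS = 9    # versions per question (from the text)

items_to_memorize = QUESTIONS * VERSIONS  # every version of every question
distinct_tests = VERSIONS ** QUESTIONS    # one version chosen per position
# Probability that two independent uniform draws produce the identical test.
p_identical = Fraction(1, distinct_tests)

print(items_to_memorize)        # 225
print(f"{distinct_tests:.3e}")  # 7.179e+23
```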

An examinee who can memorize all the combinations is probably a genius, a true genius, and a genius can pass the tests without needing to cheat.

We also considered combining the two methods: an adaptive test that is also random, in which the pool of questions is immense and the items are drawn at random. However, we realized that beyond the expected complications of mixing the methods, such a system would suffer from all the shortcomings of an adaptive system; please see the comparison table below.

Returning to the random option: we realized that such a system requires technological capabilities that had yet to be developed in our field, as well as a large team of programmers, no less important than the psychological team. Furthermore, developing such a system requires great care, accurate documentation, and creative solutions, so that generating reports and retrieving their information is fast and, at the same time, precise and up to date.

Such a system must be able to apply changes retroactively and preserve the details of the questions each examinee received, including the order of the answers and the distractors. All this must be done without taking away from employers the ability to change the norms they chose in the position's profile, to change the weight they assigned to specific tests, to create a different mix of tests, or to allow the examinee to retake the exam.
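One way to meet these requirements is to store each administration as an immutable record and apply norms and weights only at report time. The sketch below is a hypothetical data model, not LogiPass's schema: `AdministeredItem`, `Administration`, the percentile convention (share of the norm sample scoring strictly below), and the weighting formula are our own assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class AdministeredItem:
    position: int                  # question position in the test
    version_id: str                # which version was drawn for this candidate
    answer_order: Tuple[int, ...]  # original option indices, in displayed order
    correct_option: int            # original index of the correct option
    selected_display: int          # displayed position chosen, -1 if skipped

    def is_correct(self) -> bool:
        if self.selected_display < 0:
            return False
        return self.answer_order[self.selected_display] == self.correct_option

@dataclass
class Administration:
    candidate_id: str
    test_name: str
    items: List[AdministeredItem] = field(default_factory=list)

    def raw_score(self) -> int:
        # Recomputed from the stored record, so it never depends on the norms.
        return sum(item.is_correct() for item in self.items)

def percentile_rank(raw: int, norm_sample: List[int]) -> float:
    """Percentage of the norm sample scoring strictly below `raw` (one convention of several)."""
    return 100.0 * sum(1 for s in norm_sample if s < raw) / len(norm_sample)

def weighted_profile(percentiles: Dict[str, float], weights: Dict[str, float]) -> float:
    """Combine per-test percentiles using employer-chosen weights."""
    return sum(percentiles[t] * w for t, w in weights.items()) / sum(weights.values())

record = Administration("cand-001", "numerical", [
    AdministeredItem(1, "Q1-v6", (2, 0, 3, 1), correct_option=3, selected_display=2),
    AdministeredItem(2, "Q2-v3", (1, 0, 2, 3), correct_option=0, selected_display=0),
    AdministeredItem(3, "Q3-v9", (0, 1, 2, 3), correct_option=1, selected_display=-1),
])
raw = record.raw_score()                      # 1
print(percentile_rank(raw, [0, 0, 1, 2, 3]))  # 40.0 with the original norm
print(percentile_rank(raw, [0, 1, 1, 1, 2]))  # 20.0 after the norm is replaced
```

Because the record keeps the drawn version and the displayed answer order, the same stored administration can be re-reported under a new norm or a new weighting without re-testing the candidate.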

In addition to all of the above, we had to bear in mind that this is a multilingual and multicultural system. This made us realize that developing and maintaining such a system is a highly complex task, but that it is the right way to achieve maximum effectiveness.

Random testing gives employers what matters most to them: the peace of mind to let candidates be tested at home, knowing that the test taker cannot know which questions he will receive, even if we have tested him before, while information about his work style is collected at the same time.

Comparison table: adaptive vs. random test

| Parameter | Adaptive Test | Random Test |
|---|---|---|
| Item exposure | Items can be copied and learned, especially the first items, which dictate the rest of the exam and the final grade. | The greater the number of versions, the harder it becomes to memorize the questions, to the point of being nearly impossible. |
| Re-examination | A candidate tested a second time in an adaptive system will receive most of the questions he received the first time. | A candidate can be tested multiple times without fear of foul play. The chance of question repetition is negligible, and combined with the shuffling of answer positions, this makes cheating extremely difficult. |
| Testing multiple examinees simultaneously | Copying from one another is less convenient than in a standard test, but only slightly: the initial questions are the same, and subsequent questions differ only if the examinees' answers differ. | Testing multiple examinees simultaneously does no harm, since each of them receives a completely different test. |
| Work style | It is impossible to get an impression of the examinee's work style. The examinees are not on the same 'bar', so they cannot be compared. | Many essential characteristics of the examinees' work style can be collected, analyzed, and compared, for example, how they deal with difficulty and with pressure. |
| Test duration | Not comparable: the exam time may differ from examinee to examinee, and even between two sessions of the same candidate. | The maximum exam duration is known in advance and is the same for all examinees taking a given test. |
| Performance speed | Examinees cannot be compared, because the number of items and the duration of the exam are not predetermined. | Data can be collected about a specific candidate across the entire assessment and compared with other examinees for each test. |
| Answer correction | Going back to correct an answer is impossible, since a correction would disrupt the algorithm that determines the next question. | The candidate can go back and correct his answers within the test's time limit. This provides another type of data that can support diagnostic conclusions about the test taker. |

Conclusion

A system in which the items are randomly drawn from different versions is a professional and technological challenge on a completely different level. The number of participants in the norming pilot must be massive. The investment in QA, in the professional side (psychologists), and in the technical team (programmers) is far higher than for a single-version system, however long that system may be. Nevertheless, in our view, this is the best way to provide a complete and durable solution to the problem of copying, studying, and 'burning' the test details.

In the age of the Internet and examining candidates from home, this is the best and safest way to give recruiters what is most important to them - stability and reliability of the test results. In addition, we recommend adding the ability to photograph the examinee during the test as a deterrent factor (our system offers this feature).

There are additional advantages: the ability to test many candidates at the same time, since each receives a unique test; the ability to compare examinees in different respects, such as performance speed and conduct during the test; and the ability to identify personality characteristics derived from skill testing, such as work style, persistence, impulsiveness, and hesitation. As many of our clients have reported to us, this last capability is highly valuable.


Ofir has served as head of the professional team at LogiPass since the beginning of 2023. He joined LogiPass with knowledge and experience in the research and development of various computerized screening systems, including several years of screening and evaluating candidates for a range of positions in the Israel Defense Forces. Before joining the company, Ofir also worked at Actiview and at the Adam Milo Institute.
